Tracing the Arc of Energy Efficiency: History and Future Outlook of Measurement & Verification

In our last issue, we analysed the key role of AI-powered Measurement & Verification (M&V) in the decarbonization of the buildings sector. We also introduced some of the key concepts behind energy savings estimation. In today’s post, we’ll take a step back and look at a brief history of energy efficiency verification protocols. In the last section, I’ll share a few notes about how I see the future of M&V.

A brief history of Measurement & Verification

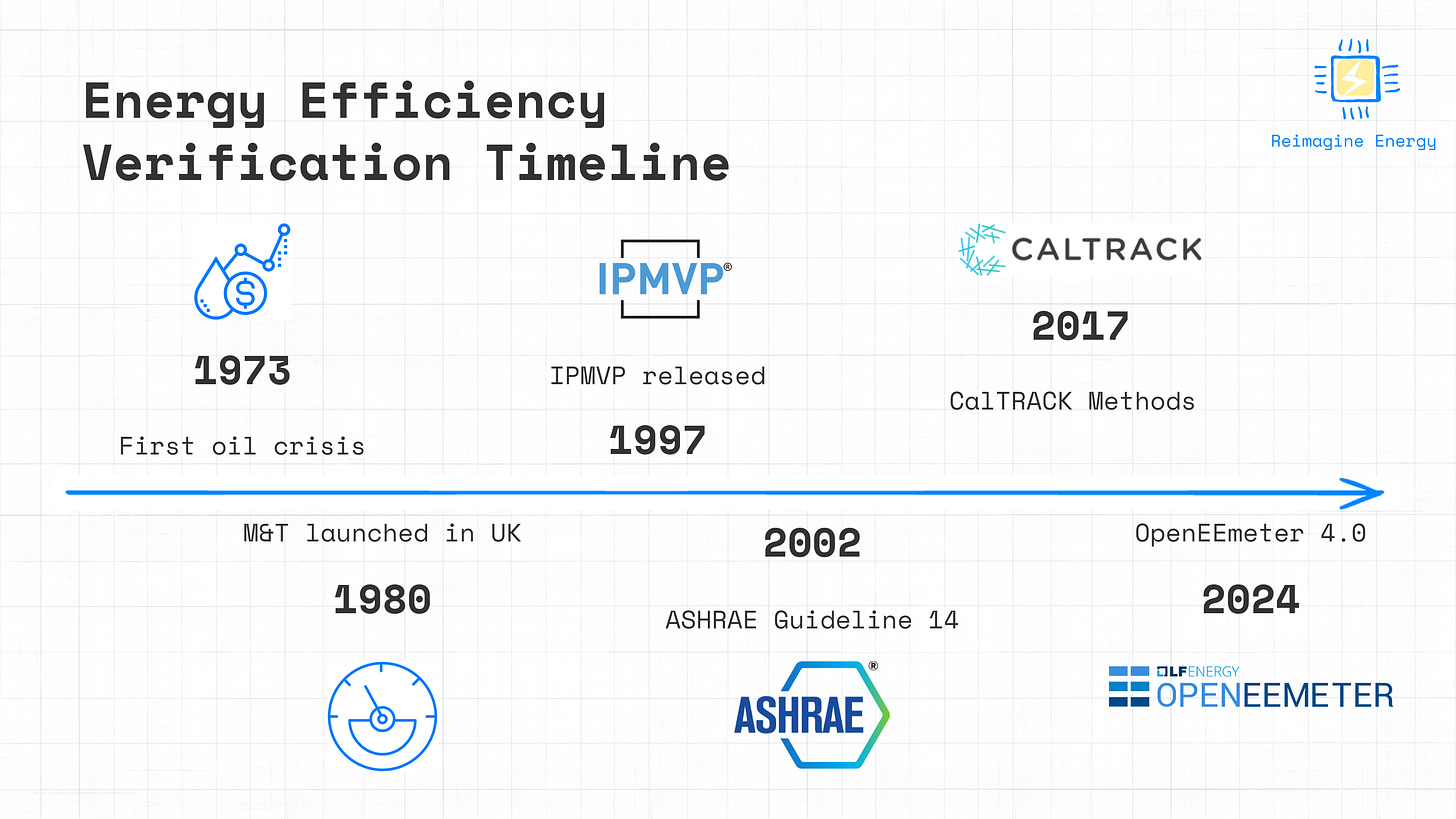

1970s

The roots of formal M&V practices can be traced back to the energy crises of the 1970s. During this time, the need for energy conservation became evident, leading to the initial development of energy management practices. The discipline of energy management, as an independent field, started to take shape following the initial oil crisis in 1973 and was firmly established in the aftermath of the second oil crisis in 1979, when a significant surge in real energy prices was observed. However, at that time, formal Measurement and Verification methodologies had not yet been devised, nor was there a uniform method for validating energy savings.

1980s

The 1980s saw the first monitoring methodologies appear in both the UK and the US. Monitoring and Targeting (M&T), an energy management technique to improve energy efficiency, was first launched as a national program in the UK in 1980. It involved the systematic process of collecting, analyzing, and using energy consumption data to identify areas where energy use could be optimized. In the United States, the initial versions of Measurement and Verification systems were developed to monitor the effectiveness of utility programs. These systems focused on tracking metrics like the number of homes audited, contractors trained, and the involvement of banks in loan programs, rather than quantifying actual energy savings in kilowatt-hours.

1990s

Early in 1994, the United States Department of Energy initiated a collaboration with industry stakeholders to create a standardized approach for assessing the impact of energy efficiency investments. This collaborative effort was driven by the need to address existing hurdles in efficiency improvement projects and to foster a unified methodology for M&V practices.

By 1996, this collaboration resulted in the publication of the North American Energy Measurement and Verification Protocol (NEMVP) [1], which presented a set of methodologies devised by a committee of seasoned industry professionals. This early version, primarily aimed at audiences in North America, laid the foundational principles for M&V practices.

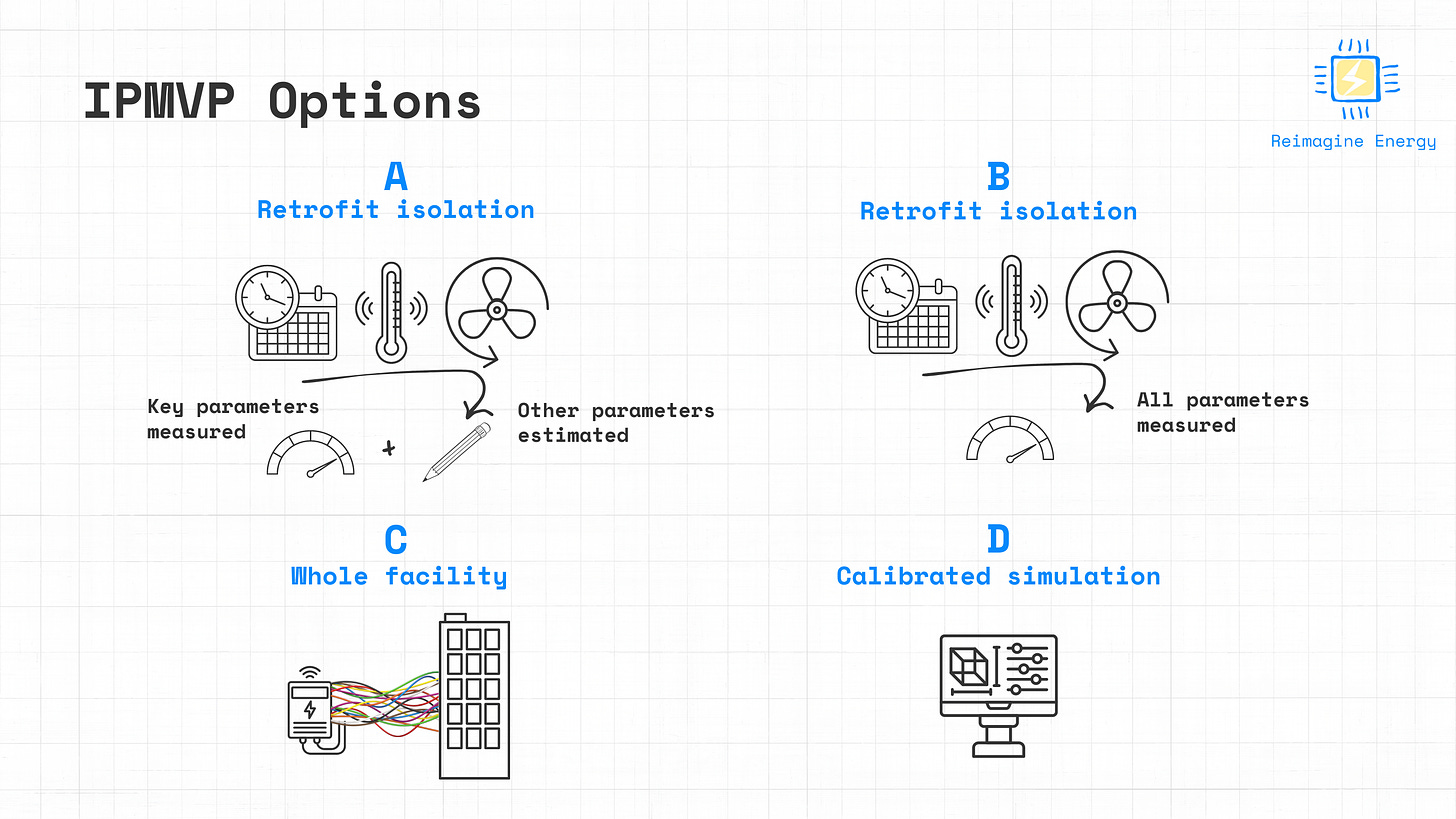

The protocol's reach and influence rapidly extended beyond North America, drawing interest and contributions from international experts and organizations. To better reflect its expanded scope and international adoption, the protocol was renamed the International Performance Measurement and Verification Protocol (IPMVP) in 1997. The IPMVP defined four main options for measuring and verifying energy savings. A complete description of each option can be found on the IPMVP official page, but an intuitive way to explain them would be:

Option A (Retrofit Isolation: Key Parameter Measurement): Isolates specific equipment's energy use with partial measurements and some estimated parameters to ensure savings are not significantly impacted by assumptions.

Option B (Retrofit Isolation: All Parameter Measurement): Requires full measurement of all energy flows for targeted equipment or systems, providing high certainty in savings without any stipulations.

Option C (Whole Facility): Utilizes whole-building energy data from utility meters to evaluate the collective impact of energy efficiency measures, capturing overall savings including indirect effects.

Option D (Calibrated Simulation): Employs calibrated simulation models to estimate energy savings, suitable for complex scenarios where direct measurement is challenging or data is unavailable.

The standardized approach from IPMVP marked a substantial change, moving on from initial estimation attempts that simply involved comparing pre- and post-project utility bills. Linear regression methodologies were introduced, with the goal of analysing historical utility data to normalize consumption figures for external variables. This advanced version of the whole-facility approach marked a significant progression from simple bill comparisons to a more analytical and structured methodology.
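As a rough illustration of the regression idea described above, here is a minimal sketch that fits a one-variable baseline model (energy versus heating degree days) on pre-retrofit data and uses it to estimate avoided energy use after the retrofit. The function names, the choice of heating degree days as the only explanatory variable, and all the numbers are assumptions made for this example; they are not taken from IPMVP or from any specific tool.

```python
from statistics import mean


def fit_baseline(hdd, kwh):
    """Ordinary least squares fit of kwh = intercept + slope * hdd."""
    x_bar, y_bar = mean(hdd), mean(kwh)
    sxx = sum((x - x_bar) ** 2 for x in hdd)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(hdd, kwh))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


def avoided_energy(intercept, slope, hdd_post, kwh_post):
    """Baseline prediction under post-retrofit weather minus metered use."""
    predicted = [intercept + slope * x for x in hdd_post]
    return sum(predicted) - sum(kwh_post)


# Made-up monthly data for the baseline (pre-retrofit) period.
baseline_hdd = [400, 350, 300, 150, 50, 0]
baseline_kwh = [9000, 8000, 7000, 4000, 2000, 1000]

# Made-up monthly data for the reporting (post-retrofit) period.
post_hdd = [420, 330, 280]
post_kwh = [8000, 6500, 5800]

intercept, slope = fit_baseline(baseline_hdd, baseline_kwh)
savings = avoided_energy(intercept, slope, post_hdd, post_kwh)
print(f"baseline: {intercept:.0f} kWh + {slope:.1f} kWh per degree day")
print(f"estimated avoided energy use: {savings:.0f} kWh")
```

The point of the sketch is that the post-retrofit bills are compared against what the building would have consumed under the same weather, rather than against the raw pre-retrofit bills.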

2000s

The 2000s opened with the publication of ASHRAE Guideline 14-2002 [2], which aimed to establish standardized procedures for calculating energy and demand savings. Developed by the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE), the guideline was designed to provide a comprehensive set of principles for ensuring the reliability and accuracy of savings calculations in commercial transactions.

ASHRAE Guideline 14 introduced three distinct engineering approaches to M&V and savings determination, emphasizing the importance of maintaining savings estimate uncertainties below prescribed thresholds. The three approaches proposed (Retrofit Isolation, Whole Facility, and Whole Building Calibrated Simulation) echoed the IPMVP options, offering varying methodologies for measuring energy use and demand.

The guideline's development was driven by the need for a standardized set of calculations that could facilitate transactions between energy service companies (ESCOs), their clients, and utilities opting to purchase energy savings. One of the key novelties compared to IPMVP was the introduction of specific metrics for evaluating the validity of M&V models, setting clear thresholds for net determination bias and maximum savings uncertainty.
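To make the idea of model validity metrics more concrete, here is a small sketch of two goodness-of-fit statistics commonly associated with Guideline 14: the normalized mean bias error (NMBE) and the coefficient of variation of the root mean square error, CV(RMSE). The function names, the `n_params` degrees-of-freedom adjustment, and the sample data are assumptions for illustration; the exact definitions and acceptance thresholds should be checked against the guideline itself.

```python
from math import sqrt


def nmbe(actual, predicted, n_params=1):
    """Normalized mean bias error, in percent of mean actual consumption."""
    n = len(actual)
    y_bar = sum(actual) / n
    residual_sum = sum(a - p for a, p in zip(actual, predicted))
    return 100.0 * residual_sum / ((n - n_params) * y_bar)


def cv_rmse(actual, predicted, n_params=1):
    """Coefficient of variation of the RMSE, in percent of mean actual."""
    n = len(actual)
    y_bar = sum(actual) / n
    sse = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return 100.0 * sqrt(sse / (n - n_params)) / y_bar


# Made-up metered values and baseline model predictions (kWh).
actual = [100.0, 120.0, 80.0, 100.0]
predicted = [110.0, 110.0, 90.0, 90.0]

bias = nmbe(actual, predicted)
scatter = cv_rmse(actual, predicted)
print(f"NMBE: {bias:.2f}%  CV(RMSE): {scatter:.2f}%")
```

In this toy example the errors cancel out, so the bias is zero even though individual predictions are off by 10 kWh; that is why a bias metric is usually read together with a scatter metric such as CV(RMSE).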

2010s-2020s

After 2010, both ASHRAE Guideline 14 and IPMVP received several updates.

In 2017, a new methodology, called CalTRACK Methods [3], was published. The methodology was developed by the California Energy Commission, the California Public Utilities Commission, Pacific Gas and Electric Company, and a group of energy experts. These methods aimed to bring consistency and transparency to energy efficiency calculations, particularly focusing on the grid impact of energy savings. They yield whole building, site-level savings outputs and are designed for energy efficiency procurement purposes such as Pay-for-Performance and Non-Wires Alternatives. These methods were developed through a consensus process and are versioned to reflect the guidance of the working group.

The CalTRACK methods were complemented by the release of an open-source toolkit designed to standardize and simplify the calculation of normalized metered energy consumption (NMEC) and avoided energy use. This toolkit, known as OpenEEmeter [4], provides a suite of tools for estimating energy savings at the meter level. It offers an open-source framework for applying the CalTRACK methods, with a series of approaches for computing and summarizing estimates of avoided energy use for a single meter, which are particularly applicable in Pay-for-Performance schemes. At the time of writing this article, OpenEEmeter 4.0 has just been released to the public. [5]

The future of Measurement & Verification

Now that we have a pretty clear idea of what the history of M&V looks like, let’s try to look ahead: what does the future of M&V look like?

From rudimentary utility bill comparisons to sophisticated, data-rich methodologies enabled by AI, the journey of energy efficiency verification represents a microcosm of technological evolution within the broader energy sector.

The early approach of simply comparing pre- and post-intervention utility bills, though a logical starting point, quickly revealed its limitations. The myriad variables influencing a building's energy consumption, such as weather fluctuations and occupancy changes, demanded more advanced approaches. This necessity gave rise to the development of retrofit isolation methods and the incorporation of regression models to better account for these influencing factors. These advancements, while significant, often required complex, resource-intensive processes not suited to all projects.

Today, with the adoption of Advanced M&V Tools, often referred to as M&V 2.0, we’re looking at the dawn of a new era in the field. After several years working in this field, I have identified a few key trends that are redefining the landscape of energy efficiency verification:

Whole-facility verifications without additional hardware: The deployment of smart meters is advancing at an unprecedented pace worldwide. In Europe, there are already multiple data portals where real-time, high-frequency energy consumption data can be accessed seamlessly via API [6]. At the same time, rapid advancements in AI and cloud computing are enabling the construction of highly accurate building models in mere seconds. This convergence of technology and accessibility eliminates traditional barriers to entry for M&V, making it possible to quickly verify whole-facility savings with zero additional hardware or cost.

Enhanced retrofit isolation through IoT: On the other end of the spectrum, large buildings are becoming increasingly monitored, often equipped with thousands of sensors generating vast datasets. This abundance of data allows for a new level of retrofit isolation verification, where millions of data points can be analyzed through sophisticated AI algorithms. The granularity of this data provides an unparalleled insight into the building's energy dynamics, allowing for precise identification and verification of savings from specific energy conservation measures.

Digital twins - the future of Option D: By generating synthetic data and embedding physics directly into machine learning models, we can tailor energy-saving solutions to each building's unique characteristics, while generalizing beyond the confines of existing datasets. This also enables the simulation of rare events and the exploration of a broader spectrum of conditions, thereby enhancing the reliability and applicability of energy models. Digital twins will act as a nexus between the physical and digital realms, offering dynamic platforms that continuously learn and adapt, marrying the rigor of physics with the flexibility of AI. This hybrid modeling approach promises to refine our understanding of building energy dynamics and revolutionize the way we implement and benefit from energy efficiency measures. [7]

Expanding M&V to demand-side flexibility: With distributed energy resources introducing significant intraday price volatility, modern M&V tools must evolve to accurately assess energy savings while distinguishing between long-term energy efficiency and one-off Demand Response events. This includes incorporating demand-side flexibility analyses within energy savings verifications, ensuring M&V practices remain relevant and effective in a rapidly changing energy landscape. I recently joined an IPMVP committee that will focus on establishing guidelines for verifying demand-side flexibility events. More updates will come as we advance in the process.

That’s it for today! In the next post we’ll have our first real-world case study: I’ll show how at Ento we leveraged AI and cloud computing to verify energy efficiency savings for a group of almost 15 thousand public buildings across 74 different municipalities in Denmark. See you in two weeks!

[1] https://www.osti.gov/biblio/216306

[2] https://www.eeperformance.org/uploads/8/6/5/0/8650231/ashrae_guideline_14-2002_measurement_of_energy_and_demand_saving.pdf

[3] https://docs.caltrack.org/en/latest/methods.html

[4] https://lfenergy.org/projects/openeemeter/

[5] You can find the release info here, and experiment yourself with the Python library.

[7] If you’re interested in digital twins, have a look at Giuseppe Pinto’s newsletter.